If you read our last software blog, Challenges of Independent Verification & Validation of Intelligent Systems, you might have noticed that it seemed a little more…robotic than usual.

That’s because we let ChatGPT write it for us.

What is ChatGPT?

ChatGPT is a new AI tool that allows users to basically talk to a robot. But it’s much more than just a simple word generator—ChatGPT has the ability to write songs and essays, answer test questions, write and debug computer programs, and even play tic-tac-toe. And it’s taken the world by storm, amassing over one million users in the first few days it was made public.

This type of technology is not new (ChatGPT, for example, is a sibling model to InstructGPT, which OpenAI released in early 2022), but it is widely regarded as the most powerful AI language model ever released to the public. And it’s raising concerns, from academia to business, about the tool’s ability to plagiarize essays, create phishing emails, and replace workers in positions like sales, marketing, and customer service.

Are those concerns valid?

ChatGPT’s immense knowledge base makes it an alluring tool for tech-savvy students looking to have their homework done for them. To an untrained eye, the tool creates high-quality work, reportedly good enough to pass, or come close to passing, sections of the bar exam, the U.S. Medical Licensing Exam, and even the final for a Wharton MBA course. ChatGPT can not only create content but also tailor it and iterate on it when given specific instructions, which makes it hard to separate human-written from AI-generated text.

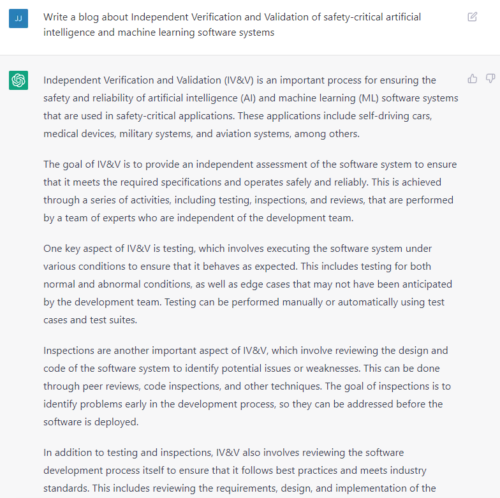

Our initial conversation with ChatGPT

For example, in creating our blog last month, we first asked ChatGPT, “Write a blog about Independent Verification and Validation of safety-critical artificial intelligence and machine learning software systems.” The content, while thorough and informative, was not incredibly interesting, as it was just a canned description of what IV&V is. So then we asked, “Rewrite it focusing on the challenges involved with the verification and validation of intelligent systems,” which produced a more engaging piece. Then we said “Now integrate those two into a complete post,” and voila! Blog done.

Was it a great blog? Not really. If you read it without knowing a computer wrote it, you’d probably think it was just an average writer producing pretty good content. But on closer inspection, you can see the chinks in the armor. Despite being a blog, the text is more polished and direct than colloquial and human. Leaving “Independent” out of our second prompt created inconsistency, with the tool unsure whether to refer to the topic as “V&V” or “IV&V.” I still had to create the pictures and headers myself. With more refinement, we probably could have improved the blog further.

So, are humans safe from the robots?

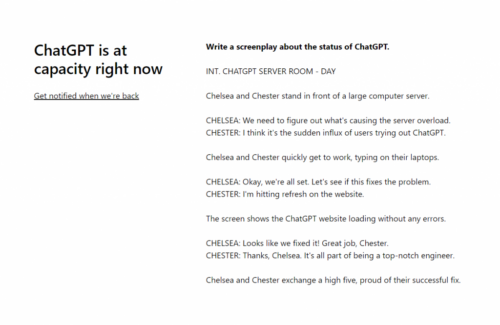

ChatGPT is very popular—causing it to be often inaccessible due to server constraints

The thing about an AI language model like ChatGPT is that, at the end of the day, you still need a person to prompt the tool and steer whatever you’re building with it in the right direction. That’s why ChatGPT is a tool, not a replacement, for most functions. Concerns about plagiarism have prompted developers to create their own tools that scan work for AI-generated content, and OpenAI’s intention to eventually charge for ChatGPT will likely slow the influx of college kids having robots do their homework.

Even if it were capable of truly simulating a human, you would still need a human to guide the tool. That means it won’t ever really replace workers; it will only empower them to work even more efficiently by leveraging new technology. Just like we always have.

ChatGPT is very popular—causing it to be often inaccessible due to server constraints